It doesn’t take more than a quick glance at any major news site these days to run into an article expressing gloom and doom about the looming chip shortage. There is indeed cause for concern from a number of different vantage points. From cars to smartphones to cloud storage, everyone feels the impact of a disrupted semiconductor supply chain. Artificial intelligence stands on increasingly shaky ground, as GPU-powered models require massive amounts of computation to achieve their dazzling performance. This level of demand threatens to push our reliance on the global supply chain (from minerals all the way to finished chips) to unsustainable levels. We believe that AI optimization is the solution to this crisis.

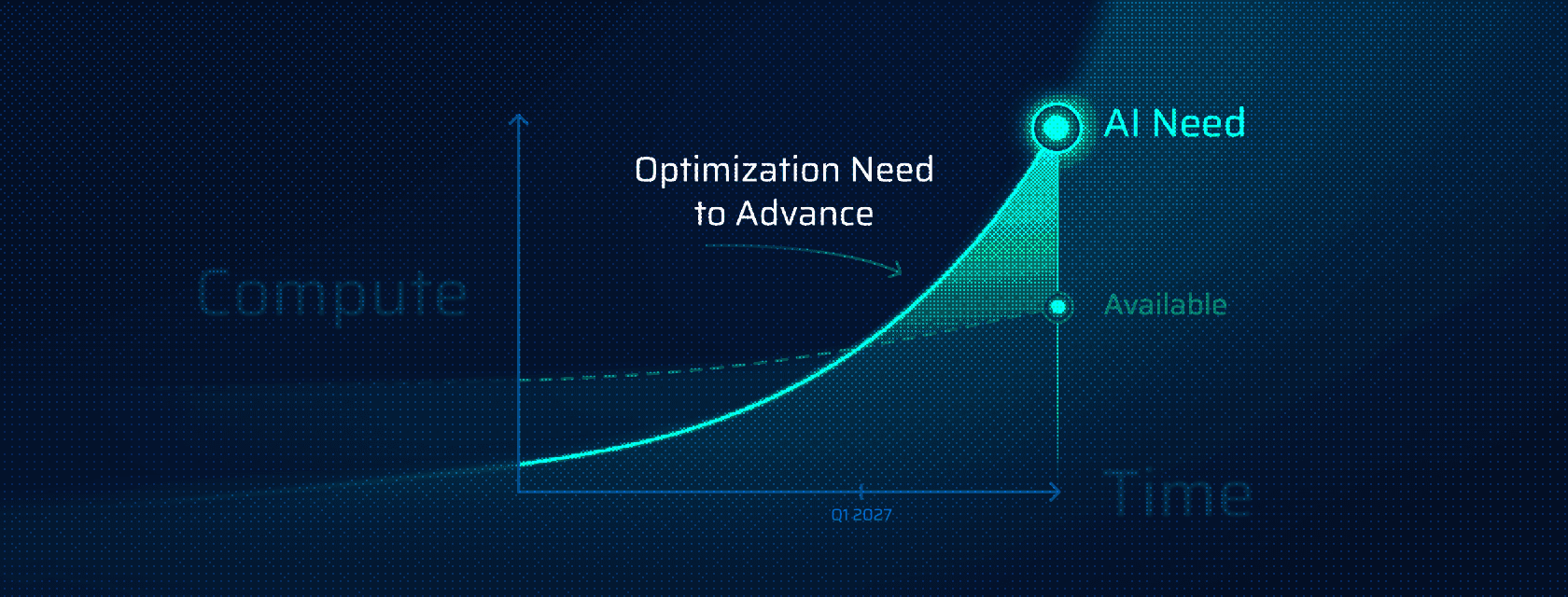

Training modern AI systems often involves thousands of GPUs running for days or even weeks. Even after initial deployment, performing the necessary computation at scale requires ongoing access to specialized hardware. As things currently stand, with fragile supply chains and hard physical limits on chip production, demand will continue to exceed supply. If AI is to remain accessible and scalable, the industry must shift its focus from newer, better hardware to smarter, more efficient design.

Optimizing AI models is the best path forward. Techniques such as model pruning, quantization, and knowledge distillation allow developers to shrink models substantially without major losses in accuracy. At Qwerky, we are designing our proprietary optimization processes with efficiency in mind from the start. Instead of relying on the newest and most powerful chips, our approach achieves strong performance with fewer parameters and lower compute requirements. This trend toward leaner models is critical not just for conserving resources, but also for expanding access to AI in regions where high-end hardware is scarce.
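To make the size savings concrete, here is a minimal sketch of one of the techniques named above: symmetric, per-tensor int8 quantization of a tensor of 32-bit weights. This is a generic textbook scheme chosen for illustration, not Qwerky’s proprietary process, and the weights are random stand-ins rather than a real model. Each 4-byte float becomes a 1-byte integer plus one shared scale factor, and rounding keeps the per-weight error within half a quantization step.

```python
import array
import random


def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization (a generic scheme, for illustration only)."""
    max_abs = max(abs(w) for w in weights)
    # Map the largest magnitude to 127; guard against an all-zero tensor.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round each weight to the nearest step and clamp to the signed int8 range.
    q = array.array("b", (max(-127, min(127, round(w / scale))) for w in weights))
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from int8 values and the shared scale."""
    return [v * scale for v in q]


random.seed(0)
# Hypothetical stand-in for one layer's weights, stored as 32-bit floats.
weights = array.array("f", (random.gauss(0.0, 0.02) for _ in range(10_000)))

q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

fp32_bytes = len(weights) * weights.itemsize  # 4 bytes per weight
int8_bytes = len(q) * q.itemsize              # 1 byte per weight
max_err = max(abs(a - b) for a, b in zip(weights, restored))

print(f"fp32: {fp32_bytes} bytes, int8: {int8_bytes} bytes")
print(f"max reconstruction error: {max_err:.6f} (half a step: {scale / 2:.6f})")
```

Whether such small per-weight errors translate into negligible accuracy loss depends on the model and task, which is why production pipelines typically validate quantized models against held-out data.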

Another promising way forward lies in hardware-agnostic optimization techniques: approaches that ensure AI models perform efficiently across a wide range of devices, regardless of the underlying chip architecture. Instead of building exclusively for one manufacturer’s GPUs, we at Qwerky are adopting frameworks and strategies that abstract away hardware-specific dependencies. Customized model solutions, for instance, can adapt their performance to the available resources, whether they run on a smartphone CPU, in the cloud, or on on-premises hardware.

Ultimately, optimizing AI is about doing more with less and building resilience for the future. It’s a chance to make AI leaner and more adaptable, enabling broad solutions across any infrastructure. It’s also a way to reduce the costs tied to physical production itself, delivering higher performance with less compute and less energy. The global chip shortage should serve as a canary in the coal mine, reminding us that technological progress is ultimately constrained by physical limitations. But by focusing on building smarter, not just bigger and better, we can ensure a path forward in which keeping up with the newest hardware is not the only game in town.