Latest news, announcements, and updates from the QWERKY team

How Qwerky brought Mamba state space models to Modular’s MAX framework—building cross-vendor, CPU-only SSM kernels and laying the foundation for QWERKY’s optimized architectures.

QWERKY AI announces a partnership with Inbox Beverage to build an AI-powered design and customer engagement platform using a custom-trained 8-billion-parameter state space model. The platform will transform how prospective customers explore brewery projects while providing ongoing operational support, demonstrating how growth-stage companies can deploy enterprise-grade AI without prohibitive infrastructure costs. Launch expected Q1 2026.

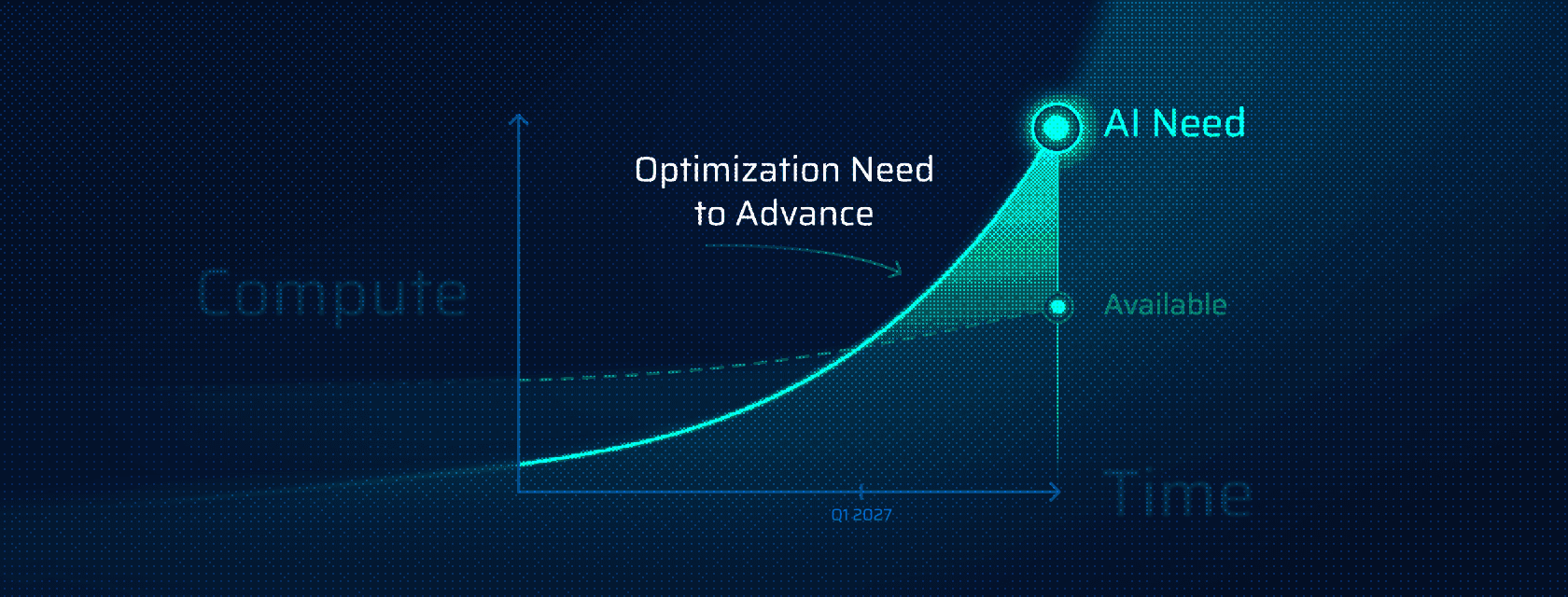

If AI is to remain accessible and scalable, the industry must shift its focus from newer, better hardware to smarter, more efficient design.

In September, the team at Qwerky expanded in a very exciting way: our new server arrived, stocked with eight NVIDIA RTX Pro 6000 Blackwell Max-Q Workstation Edition cards!

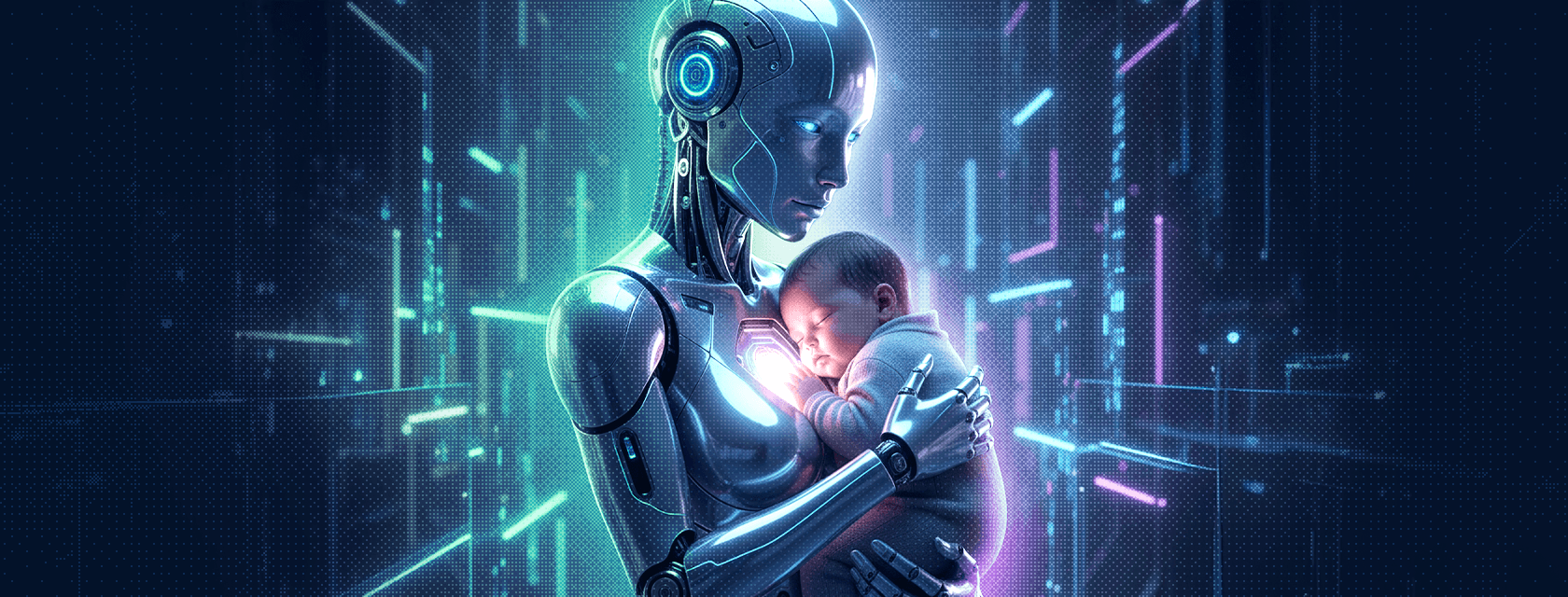

For decades, researchers have chased the fantasy of superintelligent systems that are smarter, more capable, and perhaps even godlike. Does this mean we are on the precipice of domination by machines?

In our third (and final) part, we’ll turn our attention to three novel ways of combining these two approaches and see how they attempt to address these issues.